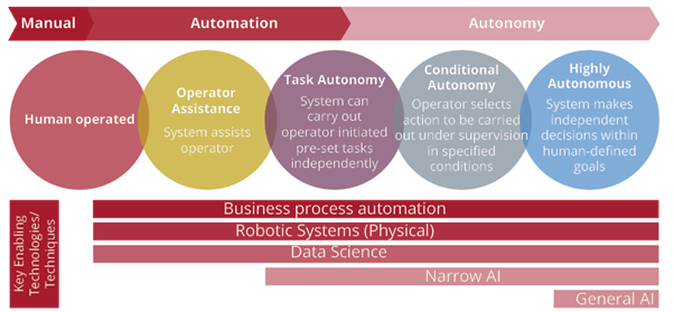

Artificial Intelligence (AI) is an evolving toolset that is becoming prevalent across the IT sector. But is it right for your organisation, should you adopt it, and how can you do so safely?

AI evokes the idea of fully automated decision-making, freeing up time for people to do the tasks that computers cannot. However, AI is better viewed as a continuum of tools, on a scale that allows more or less autonomy depending on the task you give it.

For example, predictive text has been around since 1995 and gets better with every release. It lets us type with abandon and have our spelling corrected, or prompts us with the word it expects us to write. However, all the decision-making rests in the hands, or fingers, of the user.

Another familiar example is facial recognition. Many phones offer this feature, and you no longer have to hold your face at a specific distance and angle, without glasses and in good light, to be recognised. Instead, you glance at the camera and the phone recognises you. This is a good example of autonomous biometric analysis being used to “decide” whether the similarities between photos are good enough to provide a positive match.

At the other end of the scale is AI that makes decisions that can affect many people’s lives, the common example being self-driving cars. These build on semi-automated driving projects by the US agency DARPA in 1984 and on technology embedded in highways in 1991, and AI was successfully applied to the problem in 2017, when Waymo trialled the first fully automated car.

Image source: MoD, Defence Artificial Intelligence Strategy (June 2022), p 4: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/1082416/Defence_Artificial_Intelligence_Strategy.pdf

How can I use AI in my organisation?

When it comes to using AI within your organisation, approach it as you would any new tool:

- What is the problem to solve?
- What am I trying to improve?
- What value does it provide?
- How will I mitigate risks?

Where you have initiated a change within your organisation to make an improvement or solve a problem, solid business analysis of your current state with regard to people, processes and technology will give huge gains in planning to implement AI.

Once you know what you need, tool selection is where you will need to work closely with your Leadership Team and Board to ensure they understand the risks, benefits and potential of your new AI tool. Because AI is part of a new suite of tools, it comes with a lot of bias generated from personal experience and news items. Walking your leaders through the implications of AI will allow them to partner with you and champion your new application.

The National Cyber Security Centre (part of GCSB) released guidance in January 2024 that helps to lay out the best way to approach embedding AI in your organisation. It was drafted in collaboration with 10 other countries’ security centres to provide a secure way to bring AI into your IT environment. The general premise is to approach it as you would any cyber security threat.

Many new frameworks are being developed to support the use of AI throughout a normal development cycle. You can review and adopt the one that best suits your risk profile and flexibility requirements. Weaving these frameworks into your quality checks and overall governance posture means you can make informed decisions and get great outcomes with acceptable risk to your organisation.

Can we partner with you for technology advice?

If you are thinking of implementing a new AI tool in your organisation, as an application or as part of a process, take time to stop, plan and consider, and seek out advice if you have a knowledge gap. Resolution8 can help with

-Louise Mercer


AUTHORS.

Peter Gilbert is the Director of Resolution8 and has a passion for good project delivery. ARCHIVES.

May 2024
